vcracing

Overview

“vcracing” is a car-racing game that you can drive manually or with machine learning. Unlike OpenAI Gym’s “CarRacing”, vcracing is designed for machine learning in the following ways:

- The course status (environment) is observable.

- Environments are easy to save and duplicate.

We tried modifying OpenAI Gym’s CarRacing (the original) for machine learning (see the article), but it ended up completely different, so we released it as a separate package.

Since the original exposes only the “step reward” and the “episode end flag”, autonomous driving with machine learning was almost impossible. vcracing exposes course information and car status, which allows learning that generalizes to randomly generated courses.

In addition, the original cannot “save” or “duplicate” an environment, so you cannot branch off mid-episode to run distributed searches, which wastes learning time. vcracing environments can be saved and duplicated with copy.deepcopy(), which supports a variety of learning strategies.

Installing

pip install vcracing

Sample code

from vcracing import vcracing

# Load game
env = vcracing()

# See starting image
env.render()

# Drive
for i in range(30):
    # Choose action
    # [Steer, Accel] where Steer in [-1, +1], Accel in [-1, +1]
    # Note that Steer>0 turns LEFT and Accel<0 is reverse gear
    action = [0.1, 1]  # [Steer, Accel]

    # Step
    state, reward, over, done = env.step(action)

    # env.render()  # See the image at every step

# See current image
env.render()

Instant manual play

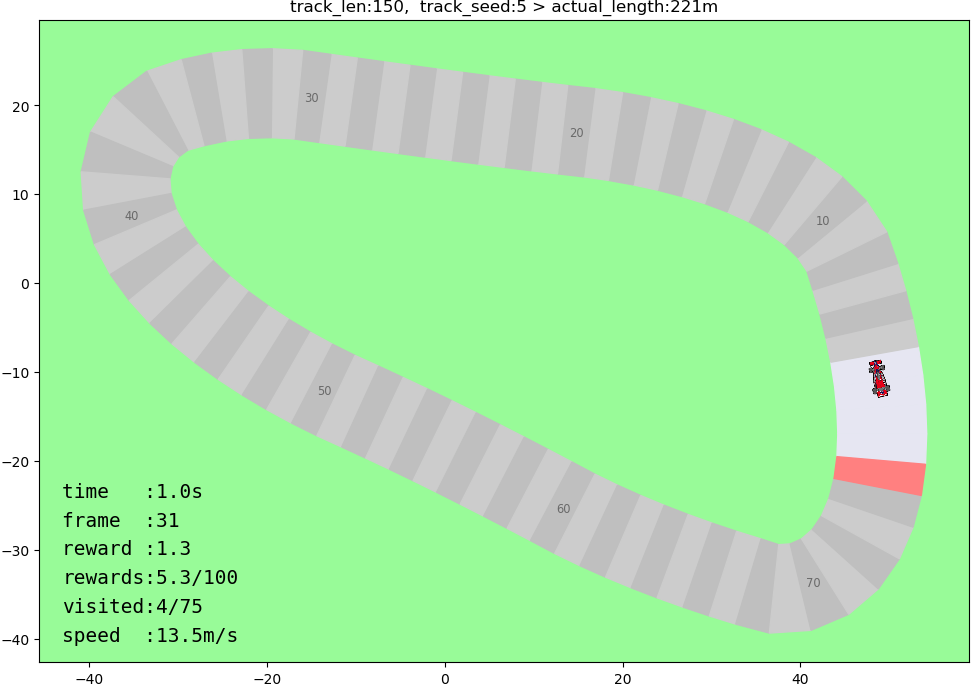

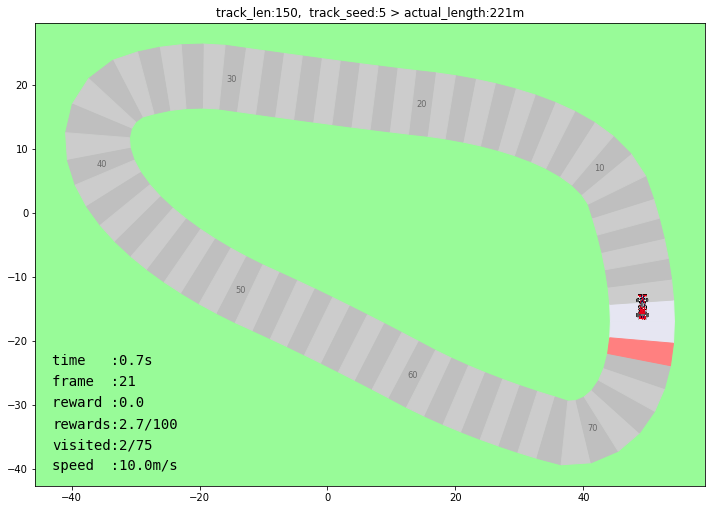

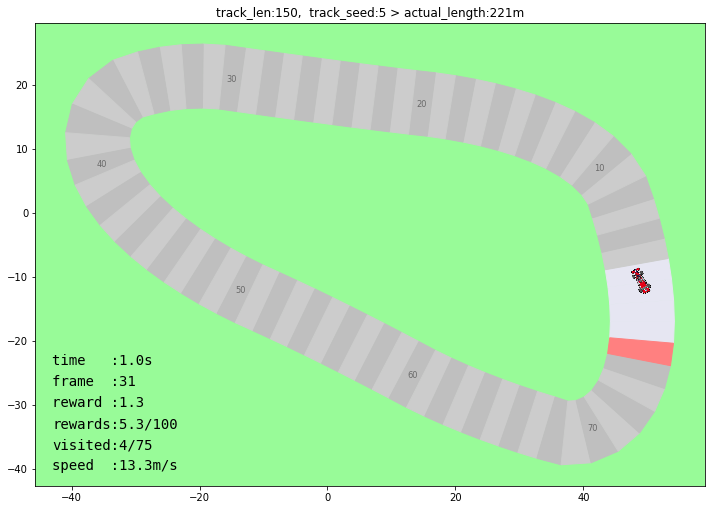

[0, 1] accelerates straight ahead, and [0.2, 1] turns slightly left. The course consists of n road panels, and each new panel you step on gives you (100/n) points of reward. The course is complete when state['rewards']==100 or done==True.
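The 100/n reward rule can be checked with plain arithmetic. This is a minimal sketch, assuming (as described above) that each newly visited panel adds 100/n and that the state dictionary carries the 'visited' and 'rewards' keys listed in the specification. Because the total is a sum of floats, the hypothetical helper is_course_complete prefers the explicit signals (done, or every panel visited) and only falls back to a tolerant comparison with 100 instead of an exact == check.

```python
import math

def reward_per_panel(n_panels):
    """Reward for stepping on one new panel, per the 100/n rule."""
    return 100.0 / n_panels

def is_course_complete(state, done, tol=1e-6):
    """Hypothetical helper: completion check for a vcracing-style state dict.

    Uses done or "all panels visited" first, then falls back to comparing
    the accumulated reward with 100 within a small tolerance.
    """
    if done:
        return True
    visited = state.get('visited')
    if visited is not None and len(visited) > 0 and all(visited):
        return True
    return math.isclose(state.get('rewards', 0.0), 100.0, abs_tol=tol)

# Summing 100/n once per panel reaches 100 only up to float rounding
n = 7
total = sum(reward_per_panel(n) for _ in range(n))
print(total, is_course_complete({'rewards': total}, done=False))
```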

env = vcracing(track_seed=1) creates a deterministic course. state = env.reset() resets the environment without changing the course.

Note that sharp turns greatly reduce speed, so gentle turning is essential for a good lap time.

Example of manual driving

You can work out a run by adding play() calls one at a time, as below. By checking the last two frames and adjusting the play() calls, you can reach the goal fairly easily even by hand.

from vcracing import vcracing

# Deterministic course
env = vcracing(track_len=200, track_seed=5, car=None)

# Step the game "step" frames with the same "action"
def play(action, step):
    for i in range(step):
        _, _, _, _ = env.step(action)
        # env.render()

# Play one by one
play([0, 1], 10)
play([0.35, 1], 8)
play([0, 1], 20)
play([0.8, 1], 12)
play([0, 1], 75)
play([0.35, 1], 65)
play([0, 1], 95)
play([0.35, 1], 60)
play([0, 1], 24)

# Check the last 2 frames
for i in range(2):
    action = [0, 1]
    _, _, _, done = env.step(action)
    print(done)
    env.render()

Specification

Load game and reset

Creating the environment automatically generates the course and selects the car image. You can specify the approximate course length and a random seed.

To reset the game without changing the course, we recommend reset(), described in the next section.

env = vcracing(track_len=200, track_seed=None, car='BT46')

| Parameter | Type | Description |
| --- | --- | --- |
| track_len | int | Approximate length of the automatically generated course (in meters). Roughly 200 to 3000 is recommended. The longer the course, the more complex it tends to be. |
| track_seed | int | Fixes the random seed used to generate the course. Even for a randomly generated course, the seed value is displayed at the top of the rendered image, so you can regenerate the same course from it. A contest may specify the course by seed value. |
| car | str | Five car types are currently available: 'BT46', 'P34', 'avro', 'novgorod', 'twinturbo'. They all have the same driving performance. |

Accelerate with a fan!? “Brabham BT46”

Phantom six-wheeler “Tyrrell P34”

Love refueling! “Avro Vulcan”

Spun around by the recoil of its own guns “Novgorod”

A great horse with turbojets “Twin Turbo”

Reset game

Resets the game without changing the course. It takes no arguments. The return value is a dictionary filled with a variety of information (the same state as returned by env.step(), described below).

state = env.reset()

Choose action

An action consists of two values: steer and accel.

action = [0, 0]  # [Steer, Accel]

| Element | Type | Range | Description |
| --- | --- | --- | --- |
| Steer | float | [-1.0, +1.0] | Force applied to the steering wheel. Steer>0 turns the car to the left. Because of inertia, the car does not stop turning immediately even if you enter 0 mid-turn. You cannot turn while the car is stopped. A sharp turn greatly reduces speed. For reference, holding ±1.0 seems to turn the car about 180 degrees per second. |
| Accel | float | [-1.0, +1.0] | Force applied to the accelerator pedal. Accel>0 means forward and Accel<0 means reverse. Because of inertia, entering -1 while moving forward does not make the car move backward immediately. There is also a resistance force, so if you keep applying 0 while driving, the car eventually stops. For reference, the top speed seems to be around 25 m/s. |
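When actions come from a learned policy, they can fall outside [-1.0, +1.0]. Whether vcracing clips them internally is not stated here, so this sketch clamps them before they are passed to env.step(). It also turns the rough "about 180 degrees per second at ±1.0" figure and the 30 fps frame rate into a frame count for a full-lock turn. Both helper names are hypothetical, and the estimate ignores inertia and the speed loss on sharp turns.

```python
import math

FPS = 30                   # frame rate stated in the specification
FULL_LOCK_DEG_PER_S = 180  # rough turning rate quoted for Steer = ±1.0

def clip_action(action):
    """Clamp [Steer, Accel] into the documented range [-1.0, +1.0]."""
    return [max(-1.0, min(1.0, float(v))) for v in action]

def frames_for_turn(degrees):
    """Approximate frames needed to turn `degrees` at full lock (ignores inertia)."""
    seconds = abs(degrees) / FULL_LOCK_DEG_PER_S
    return math.ceil(seconds * FPS)

print(clip_action([1.7, -0.4]))  # [1.0, -0.4]
print(frames_for_turn(90))       # 15
```

With these numbers a 90-degree corner needs roughly 15 frames of full lock, which is a useful starting point when tuning play() calls by hand.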

Step

Pass an action to advance the game by one frame. There are four return values; state in particular is full of information that can be used for machine learning.

state, reward, over, done = env.step(action)

| Return value | Type | Description |
| --- | --- | --- |
| state | dictionary | (See the table below.) |
| reward | float | Reward for this step. The moment you step on a new road panel, you get (100/total panels) points, so the value is usually either 0 or (100/total panels). |
| over | bool | True if the car has gone off-screen. In reinforcement learning, the intended use is to end the episode with a penalty. |
| done | bool | True if all road panels have been visited, meaning the course is cleared. In reinforcement learning, the intended use is to end the episode with a large reward. |
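These return values map onto a typical reinforcement-learning step. The sketch below folds over and done into a single terminal flag and adds the penalty and bonus the table suggests. The magnitudes (-100 and +100) and the function name shape_step are hypothetical placeholders to tune, not values defined by vcracing.

```python
OVER_PENALTY = -100.0  # hypothetical: applied when the car leaves the screen
CLEAR_BONUS = 100.0    # hypothetical: applied when every panel is visited

def shape_step(reward, over, done):
    """Return (shaped_reward, terminal) for one env.step() result."""
    shaped = float(reward)
    if over:
        shaped += OVER_PENALTY
    if done:
        shaped += CLEAR_BONUS
    # The episode ends either way: off-screen (failure) or course cleared (success)
    return shaped, bool(over or done)

# Typical use inside a training loop:
#   state, reward, over, done = env.step(action)
#   r, terminal = shape_step(reward, over, done)
print(shape_step(0.5, False, False))  # (0.5, False)
print(shape_step(0.5, True, False))   # (-99.5, True)
```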

The state dictionary contains the following keys.

| Key | Type | Description |
| --- | --- | --- |
| state['position'] | [float, float] | Coordinates of the car [x, y] |
| state['velocity'] | [float, float] | Velocity vector of the car [x component, y component] |
| state['radian'] | float | Heading of the car: the angle to the left, measured from the top of the screen (radians) |
| state['radian_v'] | float | Rotation speed of the car. A positive value means rotation to the left. |
| state['road'] | ndarray | Coordinates of all road panels. shape=(total number of panels, 4, 2), where 4 is the four corners of a panel and 2 is [x, y] |
| state['visited'] | bool array | Whether the car has visited each panel (True once stepped on). The course is cleared when all are True. |
| state['visited_count'] | int | Total number of visited panels, same as np.sum(state['visited']) |
| state['time'] | float | In-game time in seconds, starting from 0. The game runs at 30 fps. |
| state['frame'] | int | In-game frame number, starting from 1. The game runs at 30 fps. |
| state['rewards'] | float | Total reward so far. Reaching 100 means the course is cleared. |
| state['actual_length'] | int | Actual length of the course. It does not necessarily match the value specified by track_len. |
| state['speed'] | float | Speed of the car, i.e. the norm of state['velocity'] |
| state['road_max'] | [float, float] | Maximum edge coordinates of the screen [x component, y component] |
| state['road_min'] | [float, float] | Minimum edge coordinates of the screen [x component, y component] |
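To learn on randomly generated courses, the state dictionary usually has to become a fixed-length vector. This is a minimal sketch using only the keys listed above; the feature choice and the name make_observation are hypothetical. It scales the position by road_min and road_max, and it aims at the nearest unvisited panel centre, which is a simplification: the next panel in track order may be a better target.

```python
import numpy as np

def make_observation(state):
    """Flatten a vcracing-style state dict into a 9-value feature vector.

    Features: position scaled by the screen bounds (2), velocity (2),
    radian and radian_v (2), offset from the car to the nearest unvisited
    panel centre (2), and the fraction of visited panels (1).
    """
    pos = np.asarray(state['position'], dtype=float)
    lo = np.asarray(state['road_min'], dtype=float)
    hi = np.asarray(state['road_max'], dtype=float)
    pos_scaled = (pos - lo) / (hi - lo)

    vel = np.asarray(state['velocity'], dtype=float)
    spin = np.array([state['radian'], state['radian_v']], dtype=float)

    road = np.asarray(state['road'], dtype=float)  # (n_panels, 4, 2)
    centres = road.mean(axis=1)                     # centre of each panel, (n_panels, 2)
    visited = np.asarray(state['visited'], dtype=bool)
    if (~visited).any():
        targets = centres[~visited]
        nearest = targets[np.argmin(np.linalg.norm(targets - pos, axis=1))]
        offset = nearest - pos
    else:
        offset = np.zeros(2)  # course cleared: nothing left to aim for

    progress = np.array([state['visited_count'] / len(visited)], dtype=float)
    return np.concatenate([pos_scaled, vel, spin, offset, progress])
```

Because every feature is either relative to the screen bounds or to the car itself, the vector has the same length for any course, whatever its number of panels.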

Rendering an image

Renders the entire course at the current moment. Because rendering is slow, it is recommended to render only once every few frames.

env.render(mode='plt', dpi=100)

| Parameter | Type | Description |
| --- | --- | --- |
| mode | str | 'plt': runs matplotlib's plt.show() and displays the image in the console. 'save': automatically creates a ./save folder and saves the image there, named with a sequential number based on the frame number, such as "00000001.png". |
| dpi | int | Resolution of the image (dots per inch). |
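Since rendering is slow, one option is to render only every k-th frame. This sketch uses state['frame'] from the state table and assumes env.render() accepts the mode and dpi arguments just described; the function name drive_with_snapshots and the fixed action are illustrative only.

```python
def drive_with_snapshots(env, action, n_steps, every=10, mode='save', dpi=100):
    """Step env n_steps times with a fixed action, rendering every `every` frames.

    Stops early if the car leaves the screen (over) or clears the course (done).
    Returns the last state and the number of images rendered.
    """
    state, rendered = None, 0
    for _ in range(n_steps):
        state, _, over, done = env.step(action)
        if state['frame'] % every == 0:
            env.render(mode=mode, dpi=dpi)  # with mode='save', images go to ./save
            rendered += 1
        if over or done:
            break
    return state, rendered

# Example (requires vcracing):
#   from vcracing import vcracing
#   env = vcracing(track_len=200, track_seed=5)
#   state, n_images = drive_with_snapshots(env, [0, 1], 60, every=10)
```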

An example of a rendered image.

Saving and duplicating environments

You can easily duplicate an environment using copy.deepcopy() as follows.

from vcracing import vcracing
from copy import deepcopy

# Generate course
env = vcracing(track_len=200, track_seed=5)

# Go forward for 20 frames
for i in range(20):
    action = [0, 1]
    _, _, _, _ = env.step(action)

# Check image
print('env')
env.render()

# Copy env as "env2"
env2 = deepcopy(env)

# Turn left in env
for i in range(10):
    action = [1, 1]
    _, _, _, _ = env.step(action)

# Turn right in env2
for i in range(10):
    action = [-1, 1]
    _, _, _, _ = env2.step(action)

# Check images
print('env')
env.render()
print('env2')
env2.render()

At the moment of copying

env turning left

env2 turning right
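The same deepcopy() pattern enables the mid-episode branching mentioned in the overview. Below is a minimal greedy lookahead: each candidate action is held for a few frames on its own copy of the environment, the best-scoring action is returned, and the original env is never stepped. The function name branch_search, the horizon, and the off-screen penalty are hypothetical choices; the sketch relies only on the step() return values documented above.

```python
from copy import deepcopy

def branch_search(env, candidate_actions, horizon=10, over_penalty=-100.0):
    """Try each candidate action on a deepcopy of env; return (best_action, score).

    Each branch holds one action for up to `horizon` frames.
    The env passed in is left untouched.
    """
    best_action, best_score = None, float('-inf')
    for action in candidate_actions:
        branch = deepcopy(env)  # independent copy of the whole game state
        score = 0.0
        for _ in range(horizon):
            _, reward, over, done = branch.step(action)
            score += reward
            if over:
                score += over_penalty  # hypothetical penalty for leaving the screen
                break
            if done:
                break
        if score > best_score:
            best_action, best_score = action, score
    return best_action, best_score

# Example (requires vcracing):
#   action, score = branch_search(env, [[-0.5, 1], [0, 1], [0.5, 1]], horizon=15)
#   env.step(action)
```

Replacing the fixed candidate list with randomly sampled action sequences turns this into a simple random-shooting planner.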

(For developers) Obtaining driving records

After finishing a run, you can get the driving records as follows.

record = env.get_record()

# All inputs
print(record['input_all'])

# All car positions
print(record['position_all'])

# All car headings (radians)
print(record['radian_all'])

# Lap time
print(record['lap_time'])

License

Reference source

This package has been developed with reference to OpenAI Gym CarRacing-v0. We would like to express our respect and appreciation for the efforts of all those involved.

License

Private use and commercial use are permitted. Don’t forget to include a copyright notice (e.g. https://vigne-cla.com/vcracing-tutorial-en/) in any social outputs. Thank you.

For educational use, a copyright notice is NOT necessary.

Required: Copyright notice in any social outputs.

Permitted: Private Use, Commercial Use, Educational Use

Forbidden: Sublicense, Modifications, Distribution without pip install, Patent Grant, Use Trademark, Hold Liable.

Disclaimer

We will not compensate you for any damage caused by the use of vcracing.

Upcoming update

render() improvement

Update

| Version | Changes |
| --- | --- |
| 1.0.8 | step() is 10-60x faster, and its speed is now constant regardless of course length; get_record() implemented; course length adjusted; lap time displayed on the image |
| 1.0.6 | Release |